I. Preface

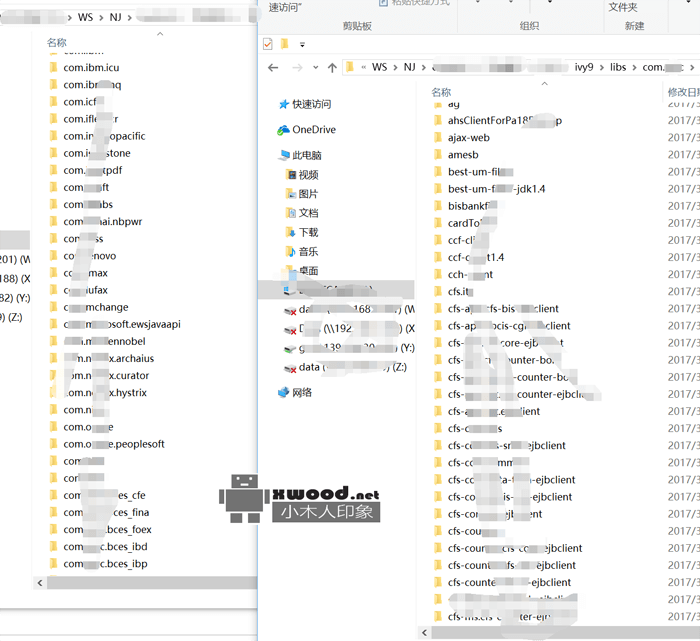

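Our project group's network security policy is strict, and all project dependencies come from an Ivy-based central repository. I therefore decided to design a high-performance web crawler (Spider) that can quickly download the entire 44 GB online Ivy or Maven central repository on the intranet for offline use. This serves both as a deeper technical study and as a convenience for development, since work is no longer tied to the company's internal network. The crawl results are shown in the figure below.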

II. Practice Notes

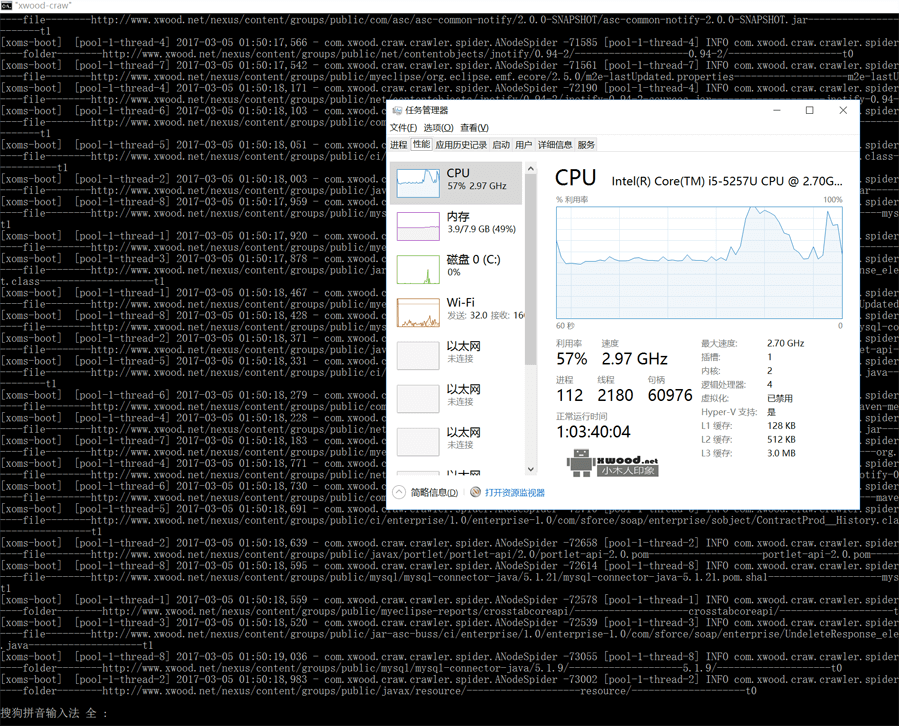

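The crawler relies mainly on HtmlUnit to parse web page document objects and DOM element nodes (the ANodeSpider source below additionally uses Jsoup to select link elements from the fetched HTML). On top of that, the crawler is modeled as separate components, the dependencies between modules are decoupled, and CPU resources are scheduled so that network download and data I/O latency are reduced. The actual running effect is shown below.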

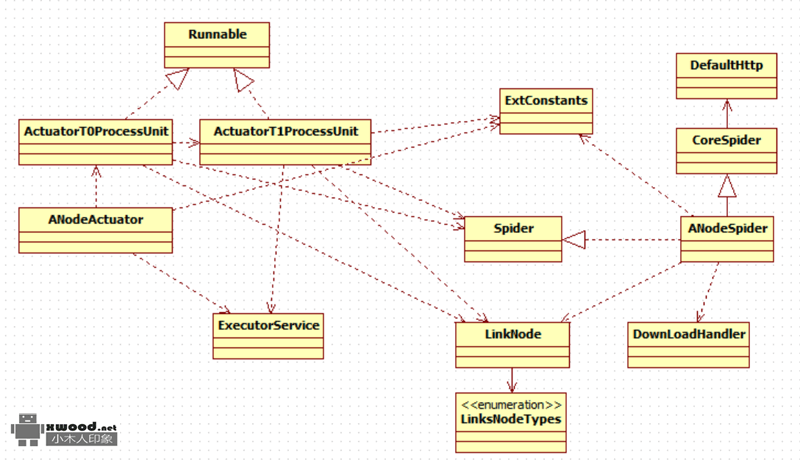
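
The two-stage design described above (parsing tasks that feed a bounded queue, and download workers that drain it) can be sketched with the JDK alone. This is only a minimal illustration under stated assumptions, not the xwood-craw implementation: the class name `CrawlPipelineSketch`, the in-memory `SITE` map that stands in for the remote repository, and the poison-pill shutdown are all hypothetical choices made for the example.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.Queue;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.ConcurrentLinkedQueue;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.TimeUnit;

class CrawlPipelineSketch {

    // Hypothetical stand-in for the repository: folder -> entries ("/" suffix marks a folder).
    static final Map<String, List<String>> SITE = new HashMap<>();
    static {
        SITE.put("/", Arrays.asList("/org/", "/com/"));
        SITE.put("/org/", Arrays.asList("/org/a.jar", "/org/a.pom"));
        SITE.put("/com/", Arrays.asList("/com/lib/", "/com/b.jar"));
        SITE.put("/com/lib/", Arrays.asList("/com/lib/c.jar"));
    }

    static final String POISON = "__END__"; // tells one download worker to stop

    // Stage 1: walk an entry recursively and queue every file link found.
    static void collect(String entry, BlockingQueue<String> fileQueue) {
        try {
            if (!entry.endsWith("/")) {
                fileQueue.put(entry); // blocks while the bounded queue is full
                return;
            }
            for (String child : SITE.getOrDefault(entry, Collections.emptyList())) {
                collect(child, fileQueue);
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }

    // Stage 2: take file links until a poison pill arrives; "downloading" is just recording.
    static void drain(BlockingQueue<String> fileQueue, Queue<String> downloaded) {
        try {
            for (String f = fileQueue.take(); !f.equals(POISON); f = fileQueue.take()) {
                downloaded.add(f);
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }

    static List<String> crawl(String indexUrl, int parserThreads, int downloadThreads) {
        BlockingQueue<String> fileQueue = new LinkedBlockingQueue<>(2); // small, to show back-pressure
        Queue<String> downloaded = new ConcurrentLinkedQueue<>();
        ExecutorService parsers = Executors.newFixedThreadPool(parserThreads);
        ExecutorService downloaders = Executors.newFixedThreadPool(downloadThreads);
        try {
            // Start the consumers first so producers never wait forever on a full queue.
            for (int i = 0; i < downloadThreads; i++) {
                downloaders.execute(() -> drain(fileQueue, downloaded));
            }
            // One parsing task per top-level entry of the index page.
            for (String top : SITE.getOrDefault(indexUrl, Collections.emptyList())) {
                parsers.execute(() -> collect(top, fileQueue));
            }
            parsers.shutdown();
            parsers.awaitTermination(10, TimeUnit.SECONDS);
            // All links are queued; send one poison pill per download worker.
            for (int i = 0; i < downloadThreads; i++) {
                fileQueue.put(POISON);
            }
            downloaders.shutdown();
            downloaders.awaitTermination(10, TimeUnit.SECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        List<String> result = new ArrayList<>(downloaded);
        Collections.sort(result); // worker order is nondeterministic, so sort for display
        return result;
    }

    public static void main(String[] args) {
        System.out.println(crawl("/", 2, 2));
    }
}
```

Running `main` lists the four file links of the toy site in sorted order. The bounded queue is what decouples the two stages: when downloads fall behind, `put` blocks the parsing threads instead of letting the backlog grow without limit.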

1. Class diagram

2. Source code of the main classes

ANodeActuator.java

    package com.xwood.craw.crawler.parse;

    import java.util.List;
    import java.util.concurrent.BlockingQueue;
    import java.util.concurrent.ExecutorService;
    import java.util.concurrent.Executors;
    import java.util.concurrent.LinkedBlockingQueue;
    import java.util.concurrent.ScheduledExecutorService;
    import java.util.concurrent.TimeUnit;
    import java.util.concurrent.locks.Condition;
    import java.util.concurrent.locks.Lock;
    import java.util.concurrent.locks.ReentrantLock;

    import org.apache.log4j.Logger;

    import com.xwood.craw.crawler.spider.ANodeSpider;
    import com.xwood.craw.crawler.spider.ExtConstants;
    import com.xwood.craw.crawler.spider.LinkNode;
    import com.xwood.craw.crawler.spider.Spider;

    public class ANodeActuator {

        // Bounded task queues; the capacity-1 placeholders are replaced in the static block below.
        public static BlockingQueue<LinkNode> processT0TaskQueue = new LinkedBlockingQueue<LinkNode>(1);

        public static BlockingQueue<LinkNode> processT1TaskQueue = new LinkedBlockingQueue<LinkNode>(1);

        // Pool that runs the T0 (link-parsing) tasks.
        public static ExecutorService executor = Executors.newFixedThreadPool(ExtConstants.threadSize);

        // Scheduler that periodically runs the T1 (download) unit.
        private static ScheduledExecutorService scheduleExecutorService = Executors.newScheduledThreadPool(4);

        private static final Lock lock = new ReentrantLock();

        public static final Condition sync = lock.newCondition();

        private static Logger logger = Logger.getLogger(ANodeActuator.class);

        private static long initialDelay4f = 30000; // initial delay, in milliseconds

        private static long delay4f = 1000; // delay between runs, in milliseconds

        static {
            processT0TaskQueue = new LinkedBlockingQueue<LinkNode>(ExtConstants.queue_size);
            processT1TaskQueue = new LinkedBlockingQueue<LinkNode>(ExtConstants.queue_size);
        }

        public static void execute() {

            Spider spider = new ANodeSpider();

            // Links found on the index page.
            List<LinkNode> spider_datas = spider.getNodes(ExtConstants.indexUrl);

            int _lnsize = 0;

            // Submit one parsing task per link found on the index page.
            for (LinkNode _lnode_a : spider_datas) {

                executor.submit(new ActuatorT0ProcessUnit(_lnode_a));

                _lnsize++;

                logger.info("[ActuatorT0Process executor " + _lnsize + " running................]");
            }

            // Run the download unit repeatedly, starting after the initial delay.
            scheduleExecutorService.scheduleWithFixedDelay(new ActuatorT1ProcessUnit(), initialDelay4f, delay4f, TimeUnit.MILLISECONDS);

        }

    }

ActuatorT0ProcessUnit.java

    package com.xwood.craw.crawler.parse;

    import java.util.List;

    import org.apache.log4j.Logger;

    import com.xwood.craw.crawler.spider.ANodeSpider;
    import com.xwood.craw.crawler.spider.LinkNode;

    public class ActuatorT0ProcessUnit implements Runnable {

        private LinkNode threadLinkNode;

        protected Logger logger = Logger.getLogger(this.getClass().getName());

        public ActuatorT0ProcessUnit(LinkNode threadLinkNode) {
            super();
            this.threadLinkNode = threadLinkNode;
        }

        @Override
        public void run() {

            // Collect the file links reachable from this node.
            List<LinkNode> addLinkNodes = new ANodeSpider(threadLinkNode).getT1Nodes(threadLinkNode);

            // The queue is bounded, and addAll() throws IllegalStateException once it is full,
            // so each node is added with put(), which waits for free space instead.
            try {
                for (LinkNode node : addLinkNodes) {
                    ANodeActuator.processT1TaskQueue.put(node);
                }
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }

            logger.info("[processT1TaskQueue added:" + addLinkNodes + "] --------------->total size:" + ANodeActuator.processT1TaskQueue.size());
        }
    }

ActuatorT1ProcessUnit.java

    package com.xwood.craw.crawler.parse;

    import java.util.concurrent.ExecutorService;
    import java.util.concurrent.Executors;

    import org.apache.log4j.Logger;

    import com.xwood.craw.crawler.spider.ANodeSpider;
    import com.xwood.craw.crawler.spider.ExtConstants;
    import com.xwood.craw.crawler.spider.LinkNode;

    public class ActuatorT1ProcessUnit implements Runnable {

        private static ExecutorService unit_executor = Executors.newFixedThreadPool(1);

        private Logger logger = Logger.getLogger(ActuatorT1ProcessUnit.class);

        // Size of the T1 queue observed on the previous run.
        private static volatile long pc = 0;

        private static boolean isRunning = false;

        @Override
        public void run() {

            int _threadSize = 1;

            // The difference below is how much the queue grew since the previous run.
            // The slower it grows (parsing is winding down), the more threads go to downloading.
            if (ANodeActuator.processT1TaskQueue.size() - pc < 30) {
                isRunning = true;
            }

            if (ANodeActuator.processT1TaskQueue.size() - pc < 10) {
                _threadSize = 2;
                isRunning = true;
            }

            if (ANodeActuator.processT1TaskQueue.size() - pc < 1) {
                _threadSize = ExtConstants.threadSize;
                isRunning = true;
            }

            // The queue is still growing quickly: pause downloading and leave the CPU to parsing.
            if (ANodeActuator.processT1TaskQueue.size() - pc >= 50) {
                _threadSize = 1;
                isRunning = false;
            }

            // Let already submitted downloads finish, then release the old pool's threads;
            // without this, every run would leave another pool behind.
            unit_executor.shutdown();
            unit_executor = Executors.newFixedThreadPool(_threadSize);

            pc = ANodeActuator.processT1TaskQueue.size();

            logger.info("[ActuatorDownLoadProcessUnit executor isRunning:" + isRunning + "@_threadSize:" + _threadSize + "...............]");

            // Poll here rather than inside the task, so no task is ever created for a null node.
            LinkNode next;
            while (isRunning && (next = ANodeActuator.processT1TaskQueue.poll()) != null) {

                final LinkNode node = next;

                Runnable handler = new Runnable() {

                    @Override
                    public void run() {

                        new ANodeSpider(node).downloadByLinkNode();
                        logger.info("[ActuatorDownLoadProcessUnit isRunning:" + isRunning + ".@" + ANodeActuator.processT1TaskQueue + "]");

                    }
                };

                unit_executor.submit(handler);

            }

        }

    }

ANodeSpider.java

    package com.xwood.craw.crawler.spider;

    import java.util.List;
    import java.util.concurrent.CopyOnWriteArrayList;

    import org.jsoup.Jsoup;
    import org.jsoup.nodes.Document;
    import org.jsoup.nodes.Element;
    import org.jsoup.select.Elements;

    public class ANodeSpider extends CoreSpider implements Spider {

        protected String click_link;

        protected LinkNode click_node;

        // Links collected so far by this spider instance.
        protected List<LinkNode> click_nodes;

        public ANodeSpider() {
            super();
            this.click_nodes = new CopyOnWriteArrayList<LinkNode>();
        }

        public ANodeSpider(LinkNode click_node) {
            super();
            this.click_node = click_node;
            this.click_nodes = new CopyOnWriteArrayList<LinkNode>();
        }

        public ANodeSpider(String click_link, LinkNode click_node) {
            super();
            this.click_link = click_link;
            this.click_node = click_node;
            this.click_nodes = new CopyOnWriteArrayList<LinkNode>();
        }

        public void download() {
            download(click_node);
        }

        public void download(String alink) {

            getT1Nodes(alink);

            download();
        }

        public void downloadByLinkNode(List<LinkNode> dnodes) {
            for (LinkNode ln : dnodes) {
                if (ln.get_type() == LinksNodeTypes.t0)
                    continue;
                downloadByLinkNode(ln);
            }
        }

        public void downloadByLinkNode() {
            downloadByLinkNode(this.click_node);
        }

        // Downloads a single node; t0 nodes are skipped.
        public void downloadByLinkNode(LinkNode dnode) {
            if (dnode.get_type() == LinksNodeTypes.t0)
                return;
            new DownLoadHandler(ExtConstants.link_a_href_domain + dnode.get_href(), ExtConstants.out_path + dnode.get_absoulte_path()).execute();
        }

        // Collects the links under click_node, downloads them, then resets the collected list.
        public void download(LinkNode click_node) {

            getT1Nodes(click_node);

            for (LinkNode _n : click_nodes) {
                if (_n.get_type() == LinksNodeTypes.t0)
                    continue;
                new DownLoadHandler(ExtConstants.link_a_href_domain + _n.get_href(), ExtConstants.out_path + _n.get_absoulte_path()).execute();
            }

            this.click_nodes = new CopyOnWriteArrayList<LinkNode>();
        }

        public List<LinkNode> getNodes() {
            return getNodes(click_link);
        }

        // Fetches one page and collects every permitted link on it (no recursion).
        public List<LinkNode> getNodes(String alink) {
            try {

                String response = httpclient.doGet(alink).asHtml();
                // Note: the second argument of Jsoup.parse(String, String) is a base URI.
                Document doc = Jsoup.parse(response, ExtConstants.defaut_gcode);

                Elements es = doc.select(ExtConstants.default_node);
                for (Element e : es) {

                    LinkNode _add_node = LinkNode.transfer(new LinkNode(e.text(), e.attr(ExtConstants.default_node_attribute)));

                    if (_add_node.get_href() == null || "".equalsIgnoreCase(_add_node.get_href()))
                        continue;

                    if (!ExtConstants.getPermission(_add_node.get_href()))
                        continue;

                    click_nodes.add(_add_node);

                    logger.info("getNodes@" + _add_node.toString());
                }
            } catch (Exception e) {
                // Log the failure instead of swallowing it silently.
                logger.info("getNodes failed@" + alink + ": " + e);
            }
            return click_nodes;

        }

        public List<LinkNode> getT0Nodes() {
            return getT0Nodes(this.click_node);
        }

        public List<LinkNode> getT0Nodes(String click_link) {

            List<LinkNode> _click_nodes = getNodes(click_link);

            for (LinkNode _cn : _click_nodes) {
                getT0Nodes(_cn);
            }

            return click_nodes;
        }

        public List<LinkNode> getT0Nodes(LinkNode _node) {
            try {

                String response = httpclient.doGet(ExtConstants.link_a_href_domain + _node.get_href()).asHtml();
                Document doc = Jsoup.parse(response, ExtConstants.defaut_gcode);
                Elements es = doc.select(ExtConstants.default_node);
                for (Element e : es) {

                    LinkNode _add_node = LinkNode.transfer(new LinkNode(e.text(), e.attr(ExtConstants.default_node_attribute)));

                    if (_add_node.get_href() == null || "".equalsIgnoreCase(_add_node.get_href()))
                        continue;

                    if (!ExtConstants.getPermission(_add_node.get_href()))
                        continue;

                    if (_add_node.get_type() == LinksNodeTypes.t1) {
                        logger.info(Thread.currentThread().toString() + "click------file--------" + _add_node.toString());
                        getT0Nodes(_add_node);
                    } else {
                        logger.info(Thread.currentThread().toString() + "click------folder--------" + _add_node.toString());
                        if (!click_nodes.contains(_add_node))
                            click_nodes.add(_add_node);
                    }

                }

            } catch (Exception e) {
                logger.info("getT0Nodes failed@" + _node + ": " + e);
            }
            return click_nodes;
        }

        public List<LinkNode> getT1Nodes() {
            return getT1Nodes(click_node);
        }

        public List<LinkNode> getT1Nodes(String click_link) {

            List<LinkNode> _click_nodes = getNodes(click_link);

            for (LinkNode _cn : _click_nodes) {
                getT1Nodes(_cn);
            }

            return click_nodes;
        }

        // Recurses into t0 nodes (logged as folders) and collects every other node (logged as files).
        public List<LinkNode> getT1Nodes(LinkNode _node) {
            try {
                String response = httpclient.doGet(ExtConstants.link_a_href_domain + _node.get_href()).asHtml();
                Document doc = Jsoup.parse(response, ExtConstants.defaut_gcode);
                Elements es = doc.select(ExtConstants.default_node);
                for (Element e : es) {

                    LinkNode _add_node = LinkNode.transfer(new LinkNode(e.text(), e.attr(ExtConstants.default_node_attribute)));

                    if (_add_node.get_href() == null || "".equalsIgnoreCase(_add_node.get_href()))
                        continue;

                    if (!ExtConstants.getPermission(_add_node.get_href()))
                        continue;

                    if (_add_node.get_type() == LinksNodeTypes.t0) {
                        logger.info(Thread.currentThread().toString() + "click------folder--------" + _add_node.toString());
                        getT1Nodes(_add_node);
                    } else {
                        logger.info(Thread.currentThread().toString() + "click------file--------" + _add_node.toString());
                        if (!click_nodes.contains(_add_node))
                            click_nodes.add(_add_node);
                    }

                }

            } catch (Exception e) {
                logger.info("getT1Nodes failed@" + _node + ": " + e);
            }
            return click_nodes;
        }

        public static void main(String[] args) {
            new ANodeSpider().download(ExtConstants.indexUrl);
        }

    }

III. Software Download

Download xwood-craw-v1.3.5
